Introduction

1.1 What are Neural Networks?

Neural networks are computational models inspired by the structure and functioning of the human brain. They are a type of machine learning algorithm that can learn and make predictions based on patterns in data. Neural networks consist of interconnected nodes called neurons, which work together to process and analyze information.

Imagine you have a magical puzzle-solving machine. It can look at a puzzle, analyze its pieces, and figure out how they fit together to form a complete picture. Neural networks are like these puzzle-solving machines, but instead of puzzles, they solve problems by analyzing data.

1.2 Components of Neural Networks: Neurons, Layers, and Activation Functions

Neural networks are made up of three essential components: neurons, layers, and activation functions.

Neurons: Neurons are the building blocks of neural networks. They receive input data, perform calculations, and produce an output. Neurons are connected to each other through pathways called connections or edges.

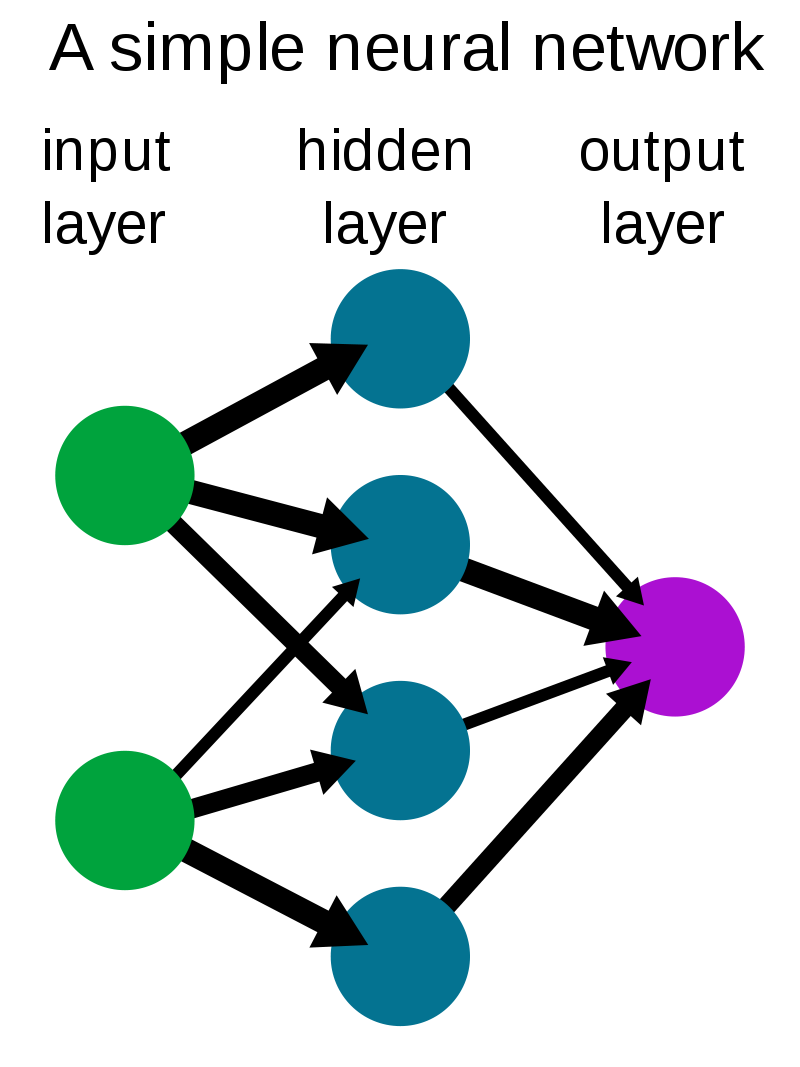
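To make the idea of a neuron concrete, here is a minimal sketch in plain Python. It assumes the most common kind of calculation, a weighted sum of the inputs plus a bias term (weights and biases are described later, in section 2.3). The function name `neuron_output` and the numbers are illustrative choices, not part of any library.

```python
def neuron_output(inputs, weights, bias):
    """Return the weighted sum of the inputs plus a bias term."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    return sum(x * w for x, w in zip(inputs, weights)) + bias


# Illustrative values only: three inputs, one weight per connection, one bias.
inputs = [1.0, 2.0, 3.0]
weights = [0.5, -1.0, 2.0]
bias = 0.5

print(neuron_output(inputs, weights, bias))  # prints 5.0
```

Each input is multiplied by the strength of its connection, the results are added up, and the bias shifts the total. In a full network this value would then pass through an activation function.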

Layers: Neurons are organized into layers. A neural network typically consists of an input layer, one or more hidden layers, and an output layer. The input layer receives the initial data, the hidden layers perform intermediate calculations, and the output layer produces the final prediction or result.
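The flow of data from the input layer through a hidden layer to the output layer can be sketched as follows. This is a toy example with hand-picked weights, not a trained network. The helper names (`dense_layer`, `forward`) are hypothetical, and ReLU is used as one common activation function (activation functions are described in the next paragraph).

```python
def relu(z):
    """A common activation: negative values become 0."""
    return max(0.0, z)


def identity(z):
    """No activation: pass the value through unchanged."""
    return z


def dense_layer(inputs, weights, biases, activation):
    """Compute one layer. Each row of `weights` belongs to one neuron."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs):
    # Hidden layer: 2 inputs feed 2 neurons.
    hidden = dense_layer(inputs, [[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0], relu)
    # Output layer: 2 hidden values feed 1 neuron.
    return dense_layer(hidden, [[2.0, 1.0]], [0.5], identity)


print(forward([3.0, 1.0]))  # prints [5.5]
```

The output of each layer becomes the input of the next one, which is all that "organized into layers" means in practice.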

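Activation Functions: An activation function is applied to the value a neuron computes from its inputs and determines the neuron's final output. Activation functions introduce non-linearity into the neural network. Without them, any stack of layers would collapse into a single linear calculation, no matter how many layers it had. This non-linearity is what allows the network to learn complex relationships between inputs and outputs. Activation functions can be compared to magical filters that transform the information passing through them.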

To understand this, imagine you have a secret code book that can convert plain text into a secret language. Each page of the code book has a different filter, and when you pass your message through the filter, it transforms into a secret code. Activation functions work similarly, transforming the neuron's input into a specific output.
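As a small illustration of these "filters", the sketch below implements three widely used activation functions in plain Python. The text above does not name specific functions, so the choice of sigmoid, ReLU, and tanh is an assumption based on common practice.

```python
import math


def sigmoid(z):
    """Squash any number into the range 0 to 1."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, this form avoids overflow in math.exp.
    e = math.exp(z)
    return e / (1.0 + e)


def relu(z):
    """Keep positive values, replace negative values with 0."""
    return max(0.0, z)


def tanh(z):
    """Squash any number into the range -1 to 1."""
    return math.tanh(z)


# Pass the same inputs through each filter to compare them.
for z in (-2.0, 0.0, 2.0):
    print(z, round(sigmoid(z), 3), relu(z), round(tanh(z), 3))
```

The same input comes out differently depending on the filter, which is why the choice of activation function is part of designing a network.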

1.3 Introduction to Deep Learning and its Advantages

Deep learning is a subfield of machine learning that focuses on training deep neural networks with multiple hidden layers. Deep neural networks can learn and represent complex patterns in data, making them powerful tools for solving challenging problems.

Think of deep learning as a magical tool that can solve the most complicated puzzles. It can analyze vast amounts of data, discover hidden patterns, and make accurate predictions.

The advantages of deep learning are like the superpowers of a superhero. It can understand and analyze images, recognize speech, and even beat top human players at games such as Go! Deep learning allows computers to learn and improve with experience, just like you get better at solving puzzles the more you practice.

Backpropagation

2.1 Understanding the Learning Process in Neural Networks

In neural networks, learning is a process where the network adjusts its parameters to improve its performance. It's like training a magical creature to solve puzzles better and better with practice. One key part of this learning process is feedback, where the network learns from its mistakes and adjusts its behavior accordingly.

Imagine you have a puzzle-solving creature that can analyze pieces and put them together. Initially, it may not solve the puzzle correctly, but it receives feedback on which pieces it placed incorrectly. It learns from these mistakes and tries to improve its puzzle-solving skills.

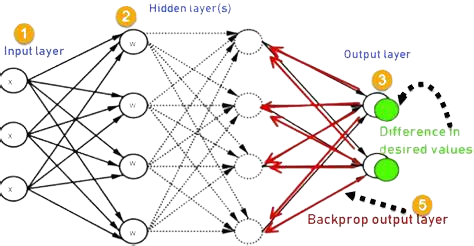
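One way to turn feedback into a number is to measure how far the network's predictions are from the correct answers. The sketch below uses mean squared error, which is an assumption chosen for simplicity (the text does not name a specific measure). The function name and the sample values are illustrative.

```python
def mean_squared_error(predictions, targets):
    """Average of the squared differences between predictions and targets."""
    if len(predictions) != len(targets) or not targets:
        raise ValueError("need two non-empty lists of equal length")
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


targets = [1.0, 0.0, 1.0]

# An untrained network that always answers 0.5 gets a large error.
print(mean_squared_error([0.5, 0.5, 0.5], targets))  # prints 0.25

# Predictions closer to the targets get a smaller error.
print(mean_squared_error([0.9, 0.1, 0.8], targets))
```

A smaller number means fewer or smaller mistakes, so learning can be described as making this number go down.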

2.2 Gradient Descent and Backpropagation Algorithm

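Gradient descent is an optimization technique that helps neural networks improve their performance. It's like giving your puzzle-solving creature a map that guides it towards solving the puzzle faster. The network's mistakes are measured by a mathematical function called the loss function, which is large when predictions are far from the correct answers and small when they are close. Gradient descent repeatedly nudges each parameter a small step in the direction that lowers the loss, gradually moving the network toward better parameter values.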
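Here is a minimal sketch of gradient descent on a made-up loss function with a single parameter `w`. The loss `(w - 3)^2`, the learning rate, and the number of steps are all illustrative assumptions. A real network has many parameters and a loss computed from data.

```python
def loss(w):
    """A toy loss that is smallest (zero) when w equals 3."""
    return (w - 3.0) ** 2


def loss_gradient(w):
    """Slope of the toy loss at w (the derivative of (w - 3)^2)."""
    return 2.0 * (w - 3.0)


def gradient_descent(w, learning_rate=0.1, steps=50):
    """Repeatedly step against the slope to reduce the loss."""
    for _ in range(steps):
        w -= learning_rate * loss_gradient(w)
    return w


start = 0.0
w = gradient_descent(start)
print("loss before:", loss(start))
print("loss after: ", loss(w))
print("w is now close to 3:", w)
```

Each step moves `w` downhill on the loss curve. With a learning rate that is too large the steps can overshoot, and with one that is too small progress is slow.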

The backpropagation algorithm is the secret behind how neural networks use gradient descent. It's like a magical guide that tells the puzzle-solving creature which pieces it should adjust and by how much. Backpropagation calculates the gradients (or slopes) of the loss function with respect to the network's parameters. It starts from the error at the output layer and works backward through the layers using the chain rule from calculus, so each weight and bias finds out how much it contributed to the mistake.
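The sketch below shows backpropagation on the smallest possible "deep" network: one hidden neuron followed by one output neuron. The network shape, the squared-error loss, and all names are assumptions made for illustration. The gradients from the chain rule are compared against slopes estimated numerically, as a sanity check.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w1, w2):
    h = sigmoid(w1 * x)  # hidden neuron
    y_hat = w2 * h       # output neuron (no activation)
    return h, y_hat


def loss(y_hat, y):
    return (y_hat - y) ** 2


def backward(x, y, w1, w2):
    """Return (dL/dw1, dL/dw2), computed from the output back to the input."""
    h, y_hat = forward(x, w1, w2)
    dL_dyhat = 2.0 * (y_hat - y)        # how the loss changes with the output
    dL_dw2 = dL_dyhat * h               # output weight: y_hat = w2 * h
    dL_dh = dL_dyhat * w2               # error passed back to the hidden neuron
    dL_dw1 = dL_dh * h * (1.0 - h) * x  # sigmoid slope is h * (1 - h)
    return dL_dw1, dL_dw2


def numerical_gradients(x, y, w1, w2, eps=1e-6):
    """Estimate the same slopes by nudging each weight slightly."""
    def loss_at(a, b):
        return loss(forward(x, a, b)[1], y)

    d_w1 = (loss_at(w1 + eps, w2) - loss_at(w1 - eps, w2)) / (2 * eps)
    d_w2 = (loss_at(w1, w2 + eps) - loss_at(w1, w2 - eps)) / (2 * eps)
    return d_w1, d_w2


x, y, w1, w2 = 1.5, 0.5, 0.8, -1.2
print("backpropagation:", backward(x, y, w1, w2))
print("numerical check:", numerical_gradients(x, y, w1, w2))
```

The two results should agree to several decimal places. Backpropagation gets there with one backward pass instead of re-running the network once per weight.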

2.3 Updating Weights and Bias Terms

In neural networks, each connection between neurons has a weight associated with it. Think of it as a magical strength assigned to the connection. Additionally, each neuron has a bias term, which is like a magical boost that helps the neuron make decisions.

During the learning process, the network updates the weights and bias terms based on the information provided by the backpropagation algorithm. It's like adjusting the puzzle-solving creature's skills and abilities based on the feedback it receives. The network makes small tweaks to its weights and bias terms, moving each one a little in the direction opposite to its gradient, to improve its performance. The size of each tweak is set by a value called the learning rate.
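The update step can be sketched with a single neuron that has one weight and one bias. The example assumes a mean squared error loss and toy data generated from the rule y = 2x + 1. The function name, learning rate, and epoch count are illustrative choices.

```python
def train_linear_neuron(data, learning_rate=0.05, epochs=500):
    """Fit y = w * x + b by repeatedly updating w and b against their gradients."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2.0 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2.0 * (w * x + b - y) for x, y in data) / n
        # The update rule: a small step opposite to each gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data that follows the rule y = 2x + 1.
data = [(x, 2.0 * x + 1.0) for x in (0.0, 1.0, 2.0, 3.0)]
w, b = train_linear_neuron(data)
print("learned weight:", w)
print("learned bias:  ", b)
```

After enough small updates the weight approaches 2 and the bias approaches 1, the values that generated the data.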

Overview of Deep Learning Frameworks

3.1 Introduction to TensorFlow

TensorFlow is a popular open-source deep learning framework, originally developed at Google, that helps you build and train neural networks. It's like a special toolbox filled with magical tools that make it easier to create powerful models. TensorFlow provides operations on multi-dimensional arrays called tensors, computes gradients automatically, and can run on CPUs, GPUs, and TPUs to perform complex computations efficiently.

Imagine you have a set of magical tools that help you build different kinds of structures. TensorFlow is just like that! It provides pre-built components that you can use to create neural networks without starting from scratch. It's like having a set of Lego blocks that you can assemble to build amazing models.

3.2 Introduction to PyTorch

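PyTorch is another open-source deep learning framework, originally developed at Meta (formerly Facebook), that has gained popularity among researchers and developers. It's like a creative canvas that allows you to express your ideas and build unique models. PyTorch runs your model as ordinary Python code, step by step, which makes it easy to inspect and debug. This emphasis on flexibility and ease of use makes it a great choice for experimentation and prototyping.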

Think of PyTorch as a magical drawing board where you can create and design your own models. It provides a friendly environment where you can express your creativity and explore different ideas. PyTorch gives you the freedom to experiment and see the results of your creations.

3.3 Popular Deep Learning Libraries and their Features

In addition to TensorFlow and PyTorch, there are other popular deep learning libraries that offer unique features and advantages. These libraries provide different tools and functionalities to support various tasks in deep learning.

For example, Keras is a user-friendly, high-level library that simplifies the process of building neural networks. It ships with TensorFlow as tf.keras, and recent versions can also run on top of other frameworks such as PyTorch and JAX. It's like a magical translator that understands your instructions and converts them into a language that the underlying framework can understand.

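Another popular library is scikit-learn, which provides a wide range of classical machine learning algorithms, such as decision trees, support vector machines, and clustering. It's like a treasure chest filled with magical algorithms that you can use for different tasks. Scikit-learn is not a deep learning framework (its neural network support is limited to simple models such as a basic multi-layer perceptron), but its tools for data preprocessing, evaluation, and model selection are often used alongside deep learning libraries.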

Implementing a Basic Neural Network

4.1 Setting up the Development Environment

Setting up the development environment involves preparing your computer with the necessary tools and software to build and run neural networks. This typically includes installing programming languages like Python, deep learning libraries like TensorFlow, and other dependencies.

Imagine you're a chef who wants to cook a delicious meal. Before you start cooking, you need to make sure your kitchen is equipped with all the necessary ingredients and cooking utensils. Similarly, setting up the development environment is like preparing your kitchen with the right tools and ingredients for building neural networks.
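Before you start cooking, it helps to check what is already in the kitchen. The sketch below uses only the Python standard library to report the Python version and whether some packages can be found. The function name `check_environment` and the default package list are assumptions for illustration.

```python
import importlib.util
import sys


def check_environment(packages=("tensorflow", "numpy")):
    """Report the Python version and whether each package is installed."""
    report = {"python": sys.version.split()[0]}
    for name in packages:
        # find_spec returns None when a top-level package cannot be found.
        report[name] = importlib.util.find_spec(name) is not None
    return report


for item, status in check_environment().items():
    print(item, "->", status)
```

If a package shows `False`, it can usually be installed with Python's package installer, for example by running `pip install tensorflow` in a terminal.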

4.2 Building a Neural Network using Python and TensorFlow

Building a neural network involves creating the structure of the network and defining its various components such as layers, activation functions, and optimization algorithms. Python, a popular programming language, is commonly used for implementing neural networks, along with deep learning libraries like TensorFlow.

Think of building a neural network as designing a robot. You have to decide how many arms, legs, and sensors it should have. In the same way, you design a neural network by deciding how many layers it should have, what type of activation functions to use, and how the different layers should be connected.
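The design decisions above can be sketched with the Keras API that ships with TensorFlow. This is a minimal example that assumes TensorFlow 2.x is installed. The layer sizes, the activation functions, the optimizer, and the loss are illustrative choices for a yes/no prediction task, and `build_model` is a hypothetical helper name.

```python
import tensorflow as tf


def build_model(num_features=2, hidden_units=8):
    """Build a small network: input layer, one hidden layer, one output neuron."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(num_features,)),                   # input layer
        tf.keras.layers.Dense(hidden_units, activation="relu"),  # hidden layer
        tf.keras.layers.Dense(1, activation="sigmoid"),          # output layer
    ])
    # Choose how the network learns (optimizer) and how mistakes are measured (loss).
    model.compile(
        optimizer="adam",
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    return model


model = build_model()
model.summary()
```

Changing `hidden_units`, adding more `Dense` layers, or swapping the activation functions are the kinds of design choices the robot analogy refers to.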

4.3 Training and Evaluating the Neural Network

Training a neural network involves teaching it to make accurate predictions by presenting it with labeled training data. The network adjusts its internal parameters (weights and biases) during the training process to minimize the difference between its predictions and the actual labels. Evaluation then measures how well the trained network performs on new, unseen data that was held back from training, which shows whether it has learned general patterns rather than memorized the examples.

Imagine you're training a pet dog to perform tricks. You show it different tricks and reward it when it performs correctly. Similarly, you train a neural network by showing it examples and providing the correct answers. The network learns from these examples and improves its ability to make accurate predictions.
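The train-then-evaluate routine can be sketched in plain Python with a single sigmoid neuron. Everything here is a toy assumption: the made-up task (the label is 1 when the two inputs add up to more than zero), the split of 200 training and 100 test examples, and the learning rate. A real project would typically use a framework such as TensorFlow instead.

```python
import math
import random


def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def make_dataset(n, rng):
    """Hypothetical toy data: label is 1.0 when x1 + x2 > 0, else 0.0."""
    data = []
    for _ in range(n):
        x1, x2 = rng.uniform(-1, 1), rng.uniform(-1, 1)
        data.append(((x1, x2), 1.0 if x1 + x2 > 0 else 0.0))
    return data


def predict(params, x):
    w1, w2, b = params
    return sigmoid(w1 * x[0] + w2 * x[1] + b)


def train(train_data, learning_rate=0.5, epochs=100):
    """Show the neuron each example and nudge its weights toward the right answer."""
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        for x, y in train_data:
            # For a sigmoid neuron with cross-entropy loss, the gradient
            # with respect to the weighted sum is (prediction - label).
            error = predict((w1, w2, b), x) - y
            w1 -= learning_rate * error * x[0]
            w2 -= learning_rate * error * x[1]
            b -= learning_rate * error
    return w1, w2, b


def accuracy(params, data):
    """Fraction of examples where the rounded prediction matches the label."""
    correct = sum((predict(params, x) >= 0.5) == (y == 1.0) for x, y in data)
    return correct / len(data)


rng = random.Random(0)
data = make_dataset(300, rng)
train_data, test_data = data[:200], data[200:]  # keep 100 examples unseen

params = train(train_data)
print("train accuracy:", accuracy(params, train_data))
print("test accuracy: ", accuracy(params, test_data))
```

The test accuracy is the number that matters, because it is measured on examples the neuron never saw during training.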

4.4 Fine-tuning and Improving Model Performance

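After training the neural network, you can fine-tune it to further improve its performance. Here this means adjusting hyperparameters, which are settings chosen before training such as the learning rate and regularization strength, then retraining and comparing the results on validation data that is kept separate from the training data. You can also explore architectural changes, such as adding layers or neurons, to enhance the network's accuracy and generalization capability. (In deep learning, the term fine-tuning is also used for continuing to train a pre-trained model on a new task, as in transfer learning.)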

Think of fine-tuning a neural network as tweaking a musical instrument. You adjust the strings, keys, and other settings to produce the best sound. Similarly, you fine-tune a neural network by making small adjustments to optimize its performance and make it more reliable.
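Adjusting a hyperparameter can be sketched as a simple loop: train once per candidate value and keep the value that does best on validation data. The example below tunes only the learning rate of a one-weight model on toy data that follows y = 3x. The candidate values and all function names are illustrative assumptions.

```python
def train_slope(data, learning_rate, epochs=50):
    """Fit y = w * x with gradient descent and return the learned w."""
    w = 0.0
    for _ in range(epochs):
        grad = sum(2.0 * (w * x - y) * x for x, y in data) / len(data)
        w -= learning_rate * grad
    return w


def mse(w, data):
    """Mean squared error of the model y = w * x on the given data."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def pick_learning_rate(train_data, val_data, candidates):
    """Train once per candidate and score each result on validation data."""
    results = {lr: mse(train_slope(train_data, lr), val_data) for lr in candidates}
    best = min(results, key=results.get)
    return best, results


train_data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]
val_data = [(4.0, 12.0), (5.0, 15.0)]

best, results = pick_learning_rate(train_data, val_data, [0.001, 0.01, 0.1, 0.3])
for lr, error in results.items():
    print("learning rate", lr, "-> validation error", error)
print("best learning rate:", best)
```

In this toy setup the smallest rates learn too slowly, the largest one overshoots and makes the error explode, and a value in between works best. The same try-and-compare loop applies to other hyperparameters.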

Summary

5.1 Key Concepts of Neural Networks and Deep Learning

Neural networks and deep learning use algorithms loosely inspired by the human brain to solve complex problems. Key concepts include:

- Neurons: These are the basic building blocks of neural networks. They receive inputs, perform computations, and generate outputs.

- Layers: Neurons are organized into layers, with each layer processing and transforming the information received from the previous layer.

- Activation Functions: Activation functions determine the output of a neuron and introduce non-linearity into the network.

- Deep Learning: Deep learning refers to the use of neural networks with multiple hidden layers. It enables the network to learn and extract complex patterns from data.

Imagine you have a team of superheroes with special powers. Each superhero has their own unique ability, like super strength or the power to fly. Together, they work as a team to solve challenging missions. In neural networks, neurons are like superheroes, each with its own special function. They work together in layers, using their abilities to process information and accomplish tasks.

5.2 Practical Applications and Limitations

Neural networks and deep learning have found practical applications in various fields. Some examples include:

- Image and Speech Recognition: Neural networks can analyze images and identify objects or recognize speech patterns, enabling technologies like facial recognition or voice assistants.

- Natural Language Processing: Neural networks can process and generate human language, making language translation and chatbots possible.

- Medical Diagnosis: Deep learning can assist in medical diagnosis by analyzing medical images or patient data to detect diseases or predict treatment outcomes.

However, it's important to consider the limitations of neural networks. They require large amounts of data for training and can be computationally expensive. They may also suffer from overfitting, where the network performs well on training data but fails to generalize to new data.

Imagine you have a super-powered microscope that can help you identify tiny bugs. It works great when you have a lot of bugs to observe, but if you only have a few, it might not work as well. Similarly, neural networks need a lot of data to learn effectively, and sometimes they may focus too much on the details and miss the big picture.
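Overfitting can be demonstrated with a deliberately extreme model. The sketch below is not a neural network: it is a "memorizer" (a 1-nearest-neighbour lookup) that simply copies the label of the closest training example. The toy task, the 20% label noise, and all names are assumptions made for illustration.

```python
import random


def make_data(n, rng, noise=0.2):
    """Hypothetical toy task: label is 1 when x > 0.5, with some labels flipped."""
    data = []
    for _ in range(n):
        x = rng.random()
        label = 1 if x > 0.5 else 0
        if rng.random() < noise:
            label = 1 - label  # simulate a noisy, mislabeled example
        data.append((x, label))
    return data


def memorizer(train_data):
    """Return a model that copies the label of the nearest training point."""
    def predict(x):
        return min(train_data, key=lambda pair: abs(pair[0] - x))[1]
    return predict


def accuracy(model, data):
    return sum(model(x) == label for x, label in data) / len(data)


rng = random.Random(0)
train_data = make_data(200, rng)
test_data = make_data(200, rng)

model = memorizer(train_data)
print("accuracy on training data:", accuracy(model, train_data))
print("accuracy on new data:     ", accuracy(model, test_data))
```

The memorizer scores perfectly on the data it has seen, because it has stored every detail including the noisy labels, but it does worse on new data. That gap between training and test performance is the signature of overfitting.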

5.3 Importance of Deep Learning in Machine Learning

Deep learning is a subfield of machine learning, which is itself a branch of artificial intelligence. It enables machines to learn from large amounts of data and make accurate predictions or decisions. Deep learning has several advantages:

- Automatic Feature Extraction: Deep learning models can automatically learn and extract features from raw data, reducing the need for manual feature engineering.

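- High Accuracy: Deep learning models have achieved state-of-the-art performance in tasks such as image recognition, speech recognition, and language translation, where they often surpass traditional machine learning algorithms. On small or simple datasets, traditional algorithms can still perform as well or better.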

- Versatility: Deep learning can be applied to a wide range of domains, from computer vision to natural language processing, enabling advancements in various fields.

Think of deep learning as a super-smart robot that can learn from experience. It doesn't need to be explicitly programmed for every task; instead, it learns from many examples, becoming better and better at solving problems.

5.4 Next Steps in Your Deep Learning Journey

To further explore the world of deep learning, you can take several steps:

- Advanced Architectures: Explore advanced neural network architectures like convolutional neural networks (CNNs) for image processing or recurrent neural networks (RNNs) for sequence data.

- Transfer Learning: Learn about transfer learning, where pre-trained models are used as a starting point for new tasks, saving time and resources.

- Real-World Projects: Engage in real-world projects where you can apply deep learning techniques to solve practical problems and gain hands-on experience.

Imagine you're a scientist with a lab full of cool gadgets. You've already built some amazing machines, but there's still so much more to discover! You can experiment with new inventions, use existing ones as a base for new projects, and even tackle real-world challenges using your knowledge and skills.